I posted this document on my personal web space in 2000. In it, I made certain predictions and suggestions. Many have come true, and some have not come true... yet. Maybe the right people read it back then and made it a reality. If so, I certainly got no credit for it, or money for that matter. I know that I have only my word, and you can believe it or not, but it is the honest truth.

Re-Thinking the Internet, Convergence and Security

©2000

This document outlines my opinions and thoughts on where the convergence of different technologies should, and very well may, lead.

There are sundry opinions, including mine, from "visionaries", technical specialists, industry leaders and third-party manufacturers of software and hardware about where all our media technologies will find themselves in the new millennium. The common goal is to "converge" all existing media into one centralized, globally accessible, user-friendly, secure and reliable system that will provide access to any information on demand. Unfortunately, there are innumerable proprietary solutions to the convergence problem. Everyone has their own ideas (and I'm no exception) about how to achieve total integration of all our existing media (voice, audio, video, data, print and input devices). Two things must be decided before anything else.

First, what will centrally control all of the media devices that exist (or will exist)? As far as a centralized piece of hardware to control all of the external devices is concerned, the PC is the definite choice. It already has the "brains", flexibility of adaptation (due to software), intense development focus, market acceptance and widespread usage necessary to ensure an enduring and evolutionary future.

Second, what media and transport can carry all the information quickly, securely and reliably across the globe? In terms of access to information, world-wide connectivity, ease of access, established and reliable infrastructure, and an ever-increasing user base, the Internet and Intranets are the only choices. The Internet is fast becoming the global infrastructure that will link all developed and developing countries into one large world-wide economy and database. Its only drawback is that it lacks structure and standards.

TRANSPORT MEDIA

Cellular and Satellite Technology

Based on these two primary criteria, a few things come to mind. First, electronics manufacturers of external media devices and home appliances should conform to existing PC standards and protocols: USB ports, for instance, as the means of interfacing desktop computer peripherals, and cellular technology as the transport medium (transmitter/receiver) for long- or short-distance remote control of home appliances and other devices (no more wires, please). Cellular technology is evolving rapidly and chip-scale integration is increasing. Since the PC will be doing most of the work, manufacturers can build less complex, and thus less expensive, products and integrate a hardware solution (a chip) directly on the PC's motherboard.

Another plus for Cellular technology is portability. A user can be anywhere in the world, in a car, plane, train, restaurant or overseas office, and instantly connect to the Internet by plugging his/her portable phone into a laptop. This is already possible today, but slow. With dynamic bandwidth allocation (according to the number and type of connections) and a static route for each connection, the user could also receive or initiate a voice call while connected to the Internet. Voice, video and audio should be treated differently from data. Data (text, static images, etc.) is not real-time critical, so it can be sent in packets without loss of information or coherency even when latency occurs (pauses during the transmission, or retransmission, of lost packets). Real-time-dependent information (audio, voice and video), however, has to "stream" to and from the user without interruption. One dedicated, high-priority Static Route should be established for these media types only.
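The "dedicated, high-priority" treatment described above is close to what networks call strict-priority queueing. The sketch below is a minimal illustration in Python, assuming just two queues on one link; the class and method names are hypothetical, and it models packet scheduling only, not the static routing itself.

```python
from collections import deque
from dataclasses import dataclass

@dataclass
class Packet:
    kind: str       # "voice", "audio", "video" or "data"
    payload: bytes

class StrictPriorityLink:
    """Hypothetical two-queue link: real-time media always goes first."""

    REALTIME_KINDS = {"voice", "audio", "video"}

    def __init__(self):
        self.realtime = deque()
        self.data = deque()

    def enqueue(self, packet):
        if packet.kind in self.REALTIME_KINDS:
            self.realtime.append(packet)
        else:
            self.data.append(packet)

    def dequeue(self):
        """Return the next packet to send, or None if both queues are empty."""
        if self.realtime:            # real-time traffic is never kept waiting
            return self.realtime.popleft()
        if self.data:                # data only uses leftover capacity
            return self.data.popleft()
        return None

link = StrictPriorityLink()
for kind in ["data", "voice", "data", "video"]:
    link.enqueue(Packet(kind, b""))
order = [link.dequeue().kind for _ in range(4)]
print(order)
```

One design caveat: under strict priority, a busy real-time queue can starve data traffic indefinitely, which is why practical schedulers often cap the real-time share.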

A flag or "timing clock" embedded in the TCP/IP data stream or header could be used to synchronize images with audio and hide latency from the user. When a user transmits from his/her PC, the video, audio and static images (taken from the Data network or from applications on the transmitting PC) can be recombined at the receiving end, buffered or delayed as needed, using the timing clock to compensate for any latency incurred during transmission. Windows Media Player and RealNetworks' RealPlayer already do this. The latency still exists, but it becomes transparent to the user. Because it travels over its own Static Route, the real-time channel should not see much variation in latency during transport, so the receiver will mainly need to buffer the signal.

Each home user will be his/her own Gateway. xDSL technology and Fibre Optics have sufficient bandwidth to carry the real-time media types; more on this in the next section. Cellular (wireless) technology can be developed to offer sufficient bandwidth for all but the most demanding media (at present), and it has the benefit of being able to transmit to an antenna to reach the POTS (Plain Old Telephone Service) while also being able (with some development) to connect to a satellite at the same time. At the mobile user's end, then, one Cellular channel provides the connection to the ISP and a link to the POTS, while another Cellular channel provides the connection to the Data Network (Internet) via antenna or satellite. Advances in FDM (Frequency Division Multiplexing), in bandwidth efficiency and in data compression hold promise for this transport. DTMF (Dual Tone Multi-Frequency) encoding, or another signalling method, can be used to control real-world devices (the TV, VCR, etc. in the living room) as well as to place a regular voice call.
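The "timing clock" idea resembles a timestamped playout buffer: each frame carries the sender's timestamp, and the receiver holds it until that timestamp plus a fixed delay, so audio and video stamped with the same time are released together. This is a minimal sketch, assuming millisecond timestamps from a shared clock and a fixed playout delay; all names are hypothetical. (In practice such timestamps are commonly carried in RTP headers over UDP rather than in TCP.)

```python
import heapq

class PlayoutBuffer:
    """Holds timestamped media frames and releases each one at
    sender_timestamp + delay, in timestamp order."""

    def __init__(self, delay_ms):
        self.delay_ms = delay_ms
        self._heap = []   # entries: (timestamp_ms, seq, stream, frame)
        self._seq = 0     # tie-breaker so stream/frame are never compared

    def push(self, timestamp_ms, stream, frame):
        heapq.heappush(self._heap, (timestamp_ms, self._seq, stream, frame))
        self._seq += 1

    def pop_due(self, now_ms):
        """Return every frame whose playout time has arrived."""
        due = []
        while self._heap and self._heap[0][0] + self.delay_ms <= now_ms:
            ts, _, stream, frame = heapq.heappop(self._heap)
            due.append((ts, stream, frame))
        return due

buf = PlayoutBuffer(delay_ms=100)
# Frames arrive out of order because of network jitter.
buf.push(40, "video", "v1")
buf.push(0, "audio", "a0")
buf.push(0, "video", "v0")
buf.push(40, "audio", "a1")
at_100 = buf.pop_due(100)   # the frames stamped 0 ms, released together
at_139 = buf.pop_due(139)   # nothing is due yet
at_140 = buf.pop_due(140)   # the frames stamped 40 ms
print(at_100, at_139, at_140)
```

The fixed delay is the trade-off: a larger value absorbs more jitter but adds more end-to-end latency.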
In the future, a user will probably have a satellite receiver/transmitter mounted on his/her roof for home use and a cellular technology-based chip built into his/her laptop for mobile use.

Satellite dishes at the Central Offices could transmit tens of Gigabits/sec to satellites that would relay the information around the world. The Iridium satellites, for example, are already in place to accommodate such transmission. Iridium is a constellation of 66 low-Earth-orbit satellites encompassing the globe. Strangely, no one seemed to want to purchase the satellite array after the company filed for bankruptcy. Just recently, Craig McCaw, a billionaire whose venture capital company is Eagle-River Investments LLC, made an offer to purchase the doomed Iridium constellation at a bargain price. Bill Gates of Microsoft, a buddy of McCaw's, had also shown interest in the venture and was ready to pump a billion or so into the company. Not long after the announcement was made public, McCaw changed his mind and decided not to bail out Iridium. But McCaw's interest in a satellite constellation to provide global communications hasn't diminished. He also has another satellite company called Teledesic (http://www.teledesic.com), and he recently announced that he would bail out ICO Global Communication (http://www.icoglobal.com), yet another satellite company in bankruptcy. McCaw has announced that he will deliver an "Internet in the Sky" with fibre-like quality of service (QoS) by 2004, with a specified bandwidth of 60 Gigabits/sec. Much of the world's communications, including some Internet traffic, are already relayed via satellite. As a result, we may already consider the satellite the convergence point. With satellite-to-satellite communications, latency is more uniform (the transmission path is more direct), and access to the world's communications is as simple as tuning into the right channel(s). The entire signal, including real-time and static data, could be buffered equally and then delivered in one simultaneous stream directly from the satellite to the user.
This would avoid the twisted and uncertain paths of ground-based networks. A user can connect via cellular and get the benefits of both wireless and wired transport. Another advantage is the wireless control we can have over our homes and appliances. Also, other data and technologies that are virtually unavailable otherwise become accessible via satellite, such as real-time GPS data, TV from all countries, aerial photography… and the list goes on.
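To put satellite latency in perspective, the minimum propagation delay for one ground-to-satellite-to-ground hop is roughly twice the orbital altitude divided by the speed of light. This sketch assumes the satellite is directly overhead and ignores processing, queueing and inter-satellite links; 35,786 km is the standard geostationary altitude and 780 km is used as a typical low-Earth-orbit altitude. The function name is hypothetical.

```python
C_KM_PER_S = 299_792.458  # speed of light in vacuum, km/s

def one_hop_delay_ms(altitude_km):
    """Minimum ground -> satellite -> ground propagation delay, assuming the
    satellite is directly overhead (real slant paths are longer)."""
    return 2 * altitude_km / C_KM_PER_S * 1000

geo = one_hop_delay_ms(35_786)   # geostationary altitude
leo = one_hop_delay_ms(780)      # a typical low-Earth-orbit altitude
print(f"GEO hop: {geo:.1f} ms   LEO hop: {leo:.1f} ms")
```

The geostationary figure (roughly a quarter of a second per hop) is a floor set by physics, which is why buffering rather than raw speed is the main tool for real-time media over such links.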

Fibre Optics and xDSL Technology

Another transport alternative is Fibre Optics. Because of its inherently large bandwidth and low noise, fibre seems a logical choice as a converged transport medium. Most of the telcos have the infrastructure in place due to ISDN, but the development focus has shifted away from Fibre Optics in favour of newer technologies such as xDSL. Cisco, after some puzzling purchases a while back, is betting on Fibre Optics. The development of new multi-Gigabit optical switches by NORTEL and others is adding to the momentum towards Fibre Optics.

A high-quality optical fibre operating in single mode could theoretically carry information at a rate equal to the frequency of the transmitting laser, assuming 1 bit per cycle. A 780nm infrared laser, with a frequency of 3.85 x 10^14 Hz, could therefore theoretically carry 385 Terabits of information per second! We know that at least 2 bits per cycle is possible, which would effectively double that number. Excimer (ultraviolet) lasers have also been developed with the potential to raise the limit further, since frequency rises as wavelength shrinks: a 193nm excimer source has roughly four times the frequency of a 780nm source. These lasers, though, would perhaps need slightly different optical fibres engineered for the new wavelength. Furthermore, industry may choose to use Excimer lasers only on the high-traffic backbones.
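The arithmetic behind these figures is f = c / λ. The sketch below reproduces it under the paragraph's own idealized assumption of a fixed number of bits per optical cycle; real fibre links are limited by modulation, dispersion and electronics long before this ceiling. With c rounded to 3.00 x 10^8 m/s, 780 nm gives the 3.85 x 10^14 Hz quoted above; the exact value of c gives about 3.84 x 10^14 Hz. Function names are illustrative only.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s

def carrier_frequency_hz(wavelength_m):
    """Optical carrier frequency from wavelength: f = c / wavelength."""
    return C / wavelength_m

def ideal_bit_rate(wavelength_m, bits_per_cycle=1):
    """Idealized ceiling assumed in the text: a fixed number of bits per cycle."""
    return carrier_frequency_hz(wavelength_m) * bits_per_cycle

f_780 = carrier_frequency_hz(780e-9)
print(f"780 nm carrier: {f_780:.3e} Hz")
print(f"1 bit/cycle:  {ideal_bit_rate(780e-9) / 1e12:.0f} Tbit/s")
print(f"2 bits/cycle: {ideal_bit_rate(780e-9, 2) / 1e12:.0f} Tbit/s")
```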

This transport method is perfect for home or stationary use, because the connection must be physical, while Cellular or Satellite technology is perfect for mobile use. The introduction of new WDM (Wavelength Division Multiplexing) technology by Ericsson, and proprietary techniques by others, promises to increase bandwidth for fibre optic backbones.

MARKET OUTLOOK

In summary, all of these technologies will be intensely developed within the next few years. New Fibre Optic routers/switches using the newly developed high-speed Optical Switches will quickly be implemented on the Internet backbone, but high-speed FDDI connections will not be widely available to consumers. There is too much money invested in xDSL, and the anticipated return on investment hasn't yet been realised. As xDSL becomes more widespread and within consumer reach, the telcos will develop it further to grab the high-speed market now.

Cable will prevail in areas where xDSL is not yet available, provided that a highly visible ad campaign and aggressive market strategy are put into action quickly. In any event, cable's limitations are far greater than those of Fibre, so at some time in the future it will grind to a halt. That is, unless cable providers refit their entire networks with Fibre Optics, which will take time and inconvenience customers, causing a slight percentage of them to shift over to xDSL out of frustration.

Cellular technology (wireless) requires a good deal of development, and an economically affordable solution, to win widespread consumer acceptance and compete with cable and xDSL in the short term. However, cellular technology will find niche markets in remote areas where cable and telephone service are not available, and will slowly expand from there as development accelerates. Unfortunately, it will struggle to capture the established, mainstream markets in metropolitan areas. Cellular has an advantage when it comes to user mobility, and it offers a truly global infrastructure with low maintenance costs if Craig McCaw et al. can deliver on Teledesic. McCaw is not offering Teledesic to the public until he gets the system on-line in 2004. It may be too late by then unless he has a large backlog of contracts to stay afloat until he can gain investor confidence and consumer acceptance. The reason is that there is too much development focus and investor support in Fibre Optic technology, and new advances are being introduced almost monthly. McCaw's advantages are dwindling daily, and unless he makes his move very soon, he will spend a lot of time and money trying to keep up with, or surpass, Fibre. Moving quickly is the only way he can turn the world-wide development focus away from Fibre and toward satellite. So either he must go public right away or move the deadline much closer. Actually, a combination of the two would be best, unless he intends to take the back seat and become a global telecom outsourcer. If he does everything right, then in the long term, Cellular-to-Satellite communications will become the popular choice for both mobile and stationary communications as well as remote control. If development shifts to satellite technology, large bandwidth would soon be available to all consumers, whether mobile or stationary.

As mentioned before, McCaw's plan will succeed if he brings it to market fast! Ericsson has introduced the ERION system into Canada, which uses WDM (Wavelength Division Multiplexing) technology. The technology permits transmission of 1 million phone calls over a single optical pair and supports TCP/IP, ATM, SONET and other popular protocols. Rogers Cable Inc., owned by AT&T, is the first to deploy WDM and, as a result, Rogers now has the most powerful transport in terms of bandwidth. The attempted merger between Rogers and Videotron in Canada would have been scary for telcos such as Bell Canada. Videotron is a Quebec-based cable TV programming and services provider (Pay-per-View, videotex information, Internet via cable modem) which has a substantial subscriber base. It also provides services in the province of Ontario. Furthermore, its cable-based Internet services are available in areas where xDSL technology is not. Videotron uses a hybrid system of coaxial cable to subscribers with a Fibre backbone. The cable-based Internet services provided by Videotron guarantee a worst-case bandwidth equal to the best case of the xDSL service provided by Bell (in theory). Bell Canada, always looking at the short-term goal, decided to concentrate on xDSL development instead of ISDN or Fibre development. Anyone who truly understands physics would have told you the way to go is Fibre Optics. xDSL was chosen because it required minimal modification to the existing infrastructure ($$$). Furthermore, Bell is not fast enough in deploying new xDSL technology (giants move slowly). It is still offering 1 Megabit xDSL modems to subscribers when 7 Megabit models have been developed, although 1.5 Mbps modems from Alcatel have just recently started to be deployed to new subscribers. Worse yet, the infrastructure modifications needed to support residential xDSL technology are moving along too slowly.
Bell does not want to make the investment unless it is sure of a quick return, which opens the door for cable.
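The "1 million phone calls" figure is plausible from simple arithmetic: WDM capacity is roughly the number of wavelengths times the rate per wavelength, and one uncompressed digital voice channel uses 64 kbit/s. The channel count and per-wavelength rate below are hypothetical illustration values, not ERION's published specifications, and framing overhead is ignored.

```python
VOICE_CHANNEL_BPS = 64_000  # one uncompressed digital (PCM) voice channel

def wdm_capacity_bps(wavelengths, per_wavelength_bps):
    """Aggregate capacity when each wavelength carries an independent signal."""
    return wavelengths * per_wavelength_bps

def voice_calls(capacity_bps, per_call_bps=VOICE_CHANNEL_BPS):
    """Whole voice channels that fit, ignoring framing and protocol overhead."""
    return capacity_bps // per_call_bps

# Hypothetical configuration: 32 wavelengths at 2.5 Gbit/s each.
cap = wdm_capacity_bps(32, 2_500_000_000)
print(f"{cap / 1e9:.0f} Gbit/s -> {voice_calls(cap):,} simultaneous calls")
```

The point of the sketch is that capacity scales with the wavelength count, so adding wavelengths to an existing fibre pair multiplies what it can carry without laying new cable.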

Bell Canada's reputation for laying off staff, subdividing departments into separate companies to eliminate benefits and unions, and restricting wage increases to cut costs does not make for productive, motivated employees. Needless to say, these policies do not attract top-notch people or retain knowledgeable, experienced staff either. So the collective "drive" of the company is beginning to disappear. This short-term, profit-focused thinking will cost them, and probably already has cost them, a great deal. You get what you pay for.

Enough digression. The only way Bell can recover is to extend its infrastructure beyond the “Kilometre limit” before cable gets there, while making the necessary modifications to the existing infrastructure at the same time! Bell, it seems, was ill-advised to go with xDSL. The potential, as anyone who understands physics will tell you, lies in Lasers and Fibre Optics. If fibre wins, watch for the next generation of lasers and fibres to increase the bandwidth yet again: that is, Excimer Lasers as the transmitting source and perhaps quartz as the fibre optic material. Since TIR (Total Internal Reflection) is what guides infrared laser light through today's fibres, it may also work for Excimer lasers. In that case, the only network upgrade necessary would be the lasers themselves. A little "pop" from a big gun came when Bell acquired Teleglobe and the CTV Television Network about a week after the announcement of the impending Rogers/Videotron merger (which never went through). This seems more like retaliation and face-saving than a strategic business move.

In conclusion, the winning technology will depend on timing and industry focus (as always), not necessarily on which technology holds the most promise. The quest is for ever-increasing bandwidth, and it seems that Fibre is winning that battle. Telco giant Bell Canada can't keep up with the demand for bandwidth because of the distance limits on residential xDSL service and its inability to move to market quickly enough. The coaxial cable used by Videotron can deliver very high bandwidth with no modifications to subscriber lines, and through the same cable a user can get AM and FM radio, TV, videotex services and pay-per-view. Personally, I think that Videotron's refusal of the merger offer was a BIG mistake. With the implementation of ERION using WDM technology, they could have upgraded their infrastructure's capacity and could certainly have offered many more cable-based services. Perhaps even a cable-based phone! Rogers, a cellular and cable services provider, has a good share of the cellular phone market, and in the long term Wireless can definitely win out over all.

MAJOR KEY ISSUES

A great many issues have been raised recently regarding privacy, spying, viruses and malicious attacks by users and even corporations on the Internet. I have heard of no concrete solutions to these issues from any government organization, hardware or software manufacturer, user group, corporation or the IETF (Internet Engineering Task Force). Why not? Because there is too much to gain from gleaning private information about you and me. Advertisers pay big bucks for surveys to find out what you watch, read, eat and drink in order to create effective campaigns that sell you products and incite you to consume. It has always been difficult and costly to obtain this information, until now. The software you use every day can, without your knowledge, transmit your personal information to corporations or individuals over the Internet. JavaScript and VBScript, for instance, were supposed to make your Internet experience more enjoyable, and they do. But these scripting languages are also the root of personal information leaks and very malicious viruses, to mention only two problems.

Basically, all manufacturers and Internet service providers leave it up to users to protect their own information. The problem is that most people are not computer-literate enough to do what is necessary to protect their privacy. Companies always have a disclaimer releasing them from EVERY responsibility imaginable, even for the availability and quality of the products and services the consumer is paying for. I feel that companies should take responsibility for the services and products they provide and guarantee the privacy of their clientele. Furthermore, the government should adopt laws prohibiting disclaimers of ALL responsibility. Companies and governments must take SOME responsibility. Companies or ISPs should be responsible for the quality of the services provided to the consumer, as well as for basic protection of these services against intrusions and malicious attacks from the Internet. If they really value their clients, they should be doing this already. Governments should take responsibility for protecting personal information held by commercial institutions, and by governments themselves, against unlawful access and piracy (by overseeing encryption standards). The government's entire raison d'être (reason for being) is to SERVE THE PEOPLE, and it should be LIABLE if any misuse occurs. Period. The IETF should take responsibility for the general structure and subdivision of the Internet, as well as for compression standards for the Internet. Software manufacturers should take responsibility for safeguarding all the private and personal information contained on our home PCs by halting all outgoing information. Hardware manufacturers should be responsible for protecting our hardware configurations, such as Flash-ROMs, against manipulation by operating systems and other programs that lack a trusted encrypted key.
Only the original manufacturer of the peripheral, via an encrypted key, should be allowed to update its Flash-ROM.
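A rule like "only the original manufacturer may update the Flash-ROM" boils down to the device verifying a cryptographic tag before flashing. This minimal sketch uses Python's standard hmac module with a shared secret as a stand-in; real firmware signing would more likely use a public-key signature (RSA or ECDSA, for example) so the device only needs the manufacturer's public key. The key and firmware bytes are made-up placeholders, and the function names are hypothetical.

```python
import hashlib
import hmac

def sign_firmware(image: bytes, manufacturer_key: bytes) -> bytes:
    """Manufacturer side: compute an authentication tag over the image."""
    return hmac.new(manufacturer_key, image, hashlib.sha256).digest()

def accept_flash_update(image: bytes, tag: bytes, manufacturer_key: bytes) -> bool:
    """Device side: refuse to flash anything whose tag does not verify."""
    expected = hmac.new(manufacturer_key, image, hashlib.sha256).digest()
    return hmac.compare_digest(expected, tag)   # constant-time comparison

key = b"hypothetical-manufacturer-secret"
firmware = b"\x00\x01 firmware v2"
tag = sign_firmware(firmware, key)
print(accept_flash_update(firmware, tag, key))          # genuine image
print(accept_flash_update(firmware + b"!", tag, key))   # tampered image
```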

The push for Internet accessibility for everyone (in Quebec it is subsidized by the government) and the gargantuan efforts of all commercial enterprises to establish e-commerce sites show an alarming trend toward violation of the right to privacy. The following are some hardware/software solutions that I propose to protect personal privacy and increase personal security for those connected to the Internet.

Hardware-based Components

I propose that at least 3 hardware components be incorporated onto computer motherboards: an encryption encoder/decoder (Security Chip), a compression encoder/decoder (Compression Chip) and a USER-ACTIVATED access-control chip (Censor Chip) to control children's viewing content, each with its own Flash-ROM. Each time the machine logs in to its ISP, the newest versions of the strongest encryption and compression algorithms are automatically "downloaded" to the PC or "Flashed" into the ROM from the ISP. During the download, the keyboard and mouse are locked to prevent interruption of the process. The user should not be able to log on to the Internet without being completely protected against hackers and other unscrupulous persons. One more addition to the hardware platform should be a multi-purpose PCI card containing a cellular technology-based transmitter to control the most common home media devices (or those with the most promising future), such as room lighting, thermostats, air conditioning, TVs, VTRs, audio systems and satellite receivers, with inputs/outputs for video cameras, digital still cameras, DVD players, etc.

Security Chip

The responsibility for the client's security should fall on the ISP that provides access to the Internet in the first place, simply because the technology is either too complicated or changes too fast for the average computer user to understand or follow. Users want to concentrate on the job at hand (their work) and be reassured that no one can access their confidential and personal information.

A website or user that requires personal information from another user must explicitly ask for it in plain language (in the form of a pop-up window) and cannot extract this information automatically from the user's hard drive or ISP. The information is then strongly encrypted by the Security Chip before being sent to the website or other user. This spares users from filling out insecure forms for unknown or little-known companies that may otherwise sell or communicate their personal information to others. Each company doing business on the Internet will be required to register with a reliable Certificate Authority (e.g. RSA) in order to be able to ask the user for information (in the form of a pop-up); otherwise, it cannot.
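The rule in this paragraph (certified requesters only, and nothing released without an explicit yes) can be sketched as a simple gate. This is an illustration under stated assumptions: the registry of certified requesters is a hypothetical stand-in for checking a Certificate Authority, `ask_user` stands in for the plain-language pop-up, and the encryption step performed by the Security Chip is omitted.

```python
# Hypothetical stand-in for "registered with a reliable Certificate Authority".
CERTIFIED_REQUESTERS = {"shop.example.com"}

def request_info(requester, wanted, personal_info, ask_user):
    """Release only the requested fields, only to certified requesters,
    and only after the user says yes to a plain-language prompt."""
    if requester not in CERTIFIED_REQUESTERS:
        return {}  # uncertified parties may not even ask
    prompt = f"{requester} is asking for your {', '.join(wanted)}. Allow?"
    if not ask_user(prompt):
        return {}  # the user declined: nothing leaves the PC
    # Only fields the user actually has and was asked about are returned.
    return {name: personal_info[name] for name in wanted if name in personal_info}

info = {"name": "A. User", "email": "user@example.com", "phone": "555-0100"}
granted = request_info("shop.example.com", ["name", "email"], info, lambda p: True)
refused = request_info("shop.example.com", ["phone"], info, lambda p: False)
blocked = request_info("unknown.example.net", ["name"], info, lambda p: True)
print(granted, refused, blocked)
```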

Compression Chip

Compression algorithms benefit both the user and the ISP. With the latest compression technologies and algorithms, users get faster performance for real-time media (audio, video, voice, etc.) and file downloads. The ISP benefits by knowing that all network traffic makes optimal, and therefore economical, use of the available network bandwidth. For instance, downloading a large, uncompressed text file (say 3MB) through its network at the typical modem speed of 5600 cps would take about 9 min. A compressed version of the same file (text can be made up to 92% smaller in some cases!) would take about 43 secs in the best case. Any reasonably compressible file format sent from, or received by, a user's PC is automatically compressed or decompressed by the Compression Chip. Web administrators should ensure that all files are as small as possible. This prevents users with ill-configured PC modems or software from hogging bandwidth at the ISP. The compression and decompression algorithms are not only for file transfer but for all media (audio, video, etc.). The information stream, both up and down, must be fully encrypted and decrypted using the Security Chip.
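The download-time figures follow from dividing file size by transfer rate. This sketch assumes the 3MB file is 3,000,000 bytes and that 5600 cps means 5600 bytes per second; it then uses Python's standard zlib module (DEFLATE) as a stand-in for whatever algorithm a Compression Chip might implement, on a deliberately repetitive sample, since real savings depend heavily on the data.

```python
import zlib

CPS = 5600  # characters (bytes) per second, the modem speed used in the text

def transfer_seconds(num_bytes, cps=CPS):
    """Time to move num_bytes at a constant rate of cps bytes per second."""
    return num_bytes / cps

size = 3_000_000                 # the 3MB text file, taken as 3,000,000 bytes
compressed = size * 8 // 100     # best case: 92% smaller leaves 8%
print(f"uncompressed: {transfer_seconds(size) / 60:.1f} min")
print(f"compressed:   {transfer_seconds(compressed):.0f} s")

# Real savings depend on the data; repetitive text compresses very well.
sample = b"the quick brown fox jumps over the lazy dog\n" * 10_000
packed = zlib.compress(sample, 9)
assert zlib.decompress(packed) == sample   # lossless round trip
print(f"zlib saved {1 - len(packed) / len(sample):.1%} on this sample")
```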

Censor Chip

Access-control chips (Censor Chips) will be activated by the user or employer if children or employees have access to the PC and want to access the Internet. As explained later in this document, the Internet should be subdivided into different domains (e.g. .adu (adult), .tee (teen), .chi (child), .bus (business), .gov (government), .med (medical), .edu (schools), etc.). The Censor Chips will automatically bar children and employees from accessing adult sites. Restricting access this way is much simpler, since the chip merely has to block one domain rather than going site by site. On logon with the ISP, the Censor Chip uploads to the ISP the domain(s) that need to be restricted for the child or employee. Alternatively, the blocking may be done locally on the user's PC itself.

The Censor Chip and Internet Domain Sub-Division

There is much concern over the constitutional rights governing freedom of speech and expression when it comes to censorship on the Internet. No one likes to be suppressed or censored, and no one should be. Unfortunately, whenever the word "freedom" is used, certain people seem to translate it mentally into "anything goes". A golden rule that I follow is: "My rights end where another's rights begin". Unfortunately, this rule is very often broken or misunderstood, and certainly not respected by many people.

Because of these unalienable rights, all sorts of information on the Internet are easily accessible to anyone. However, there are many sites that children should not be able to access because of their adult nature. With the following proposal, everyone can "have their cake and eat it too"!

Current methods for restricting access to adult sites, by both home users and businesses, involve using a proxy or registering with a filtering service such as Net Nanny. The limitation is that not all adult sites or material can be restricted this way. Newsgroups are easily accessible, and these services cannot block them out. Furthermore, there are new sites every day, and not all of them register with these services. There are always FTP servers and personal websites too.

The key lies in subdividing the Internet into specific domains. For sex sites, and all adult sites for that matter, a domain called .adu (for adult) could be created. The entire domain can then be blocked for children rather than each individual site. If the Internet Domain Authority creates these special domains, all adults can have free access to adult material as before, but children can easily be blocked out. With Censor Chips integrated onto motherboards, a parent or employer can easily create a user account on the machine for their child or employee and select from a list the domains they want the Censor Chip to block for that user. When the child or employee logs on with their username and password, the Censor Chip is activated and blocks the selected domain(s) when they access the ISP.
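The per-account, per-domain blocking described above amounts to looking up the hostname's top-level domain in that account's blocklist. A minimal sketch, assuming the proposed .adu/.tee category domains existed; the account names and blocklists are hypothetical, and the same check could run on the PC or at the ISP.

```python
# Hypothetical category domains from the proposal (.adu, .tee, .chi, ...).
# Each account on the PC gets its own set of blocked top-level domains.
USER_BLOCKLISTS = {
    "parent": set(),
    "child": {"adu", "tee"},
    "employee": {"adu"},
}

def top_level_domain(hostname):
    """'www.Example.EDU' -> 'edu' (a trailing root dot is ignored)."""
    return hostname.rstrip(".").rsplit(".", 1)[-1].lower()

def is_allowed(account, hostname):
    """One set lookup per request: a whole category is blocked at once.
    Unknown accounts raise KeyError rather than getting unrestricted access."""
    return top_level_domain(hostname) not in USER_BLOCKLISTS[account]

print(is_allowed("child", "example.adu"))      # blocked category
print(is_allowed("child", "www.Example.EDU"))  # schools are allowed
```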

Subdivision is not only practical for restricting access to a certain category of material; it is also useful for finding needed information or material much more quickly than is presently possible. How many times has someone searched the Internet using Lycos, Alta Vista, Yahoo or another search engine and got back several thousand items in the query results? If the Internet were subdivided by subject, like a directory tree, someone could go directly to the domain and/or subdomain, as with folders on their hard drive, and initiate a search from there. The returned query results would be more manageable and relevant. The next section describes the look, "feel" and proposed functionality of Operating System software designed with Internet subdivision and convergence in mind.
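Searching from a subdomain, like searching from a folder, just means restricting the candidate set to one branch before matching. A toy sketch, assuming each indexed page is filed under a path in a hypothetical subject tree; the index entries are invented examples, and a real search engine would rank results rather than substring-match.

```python
# Toy index: each page is filed under a path in a hypothetical subject tree.
INDEX = [
    ("med/cardiology", "Heart valve surgery overview"),
    ("med/cardiology", "Heart rate monitors compared"),
    ("edu/university/physics", "Lecture notes on heart-shaped curves"),
    ("bus/retail", "Valentine's heart chocolates"),
]

def search(keyword, subtree=""):
    """Return titles containing keyword, limited to one branch of the tree.
    An empty subtree searches everything, like searching from the root."""
    prefix = subtree.strip("/")
    hits = []
    for path, title in INDEX:
        # Match the branch itself or anything below it ("med" must not match "medx").
        in_branch = not prefix or path == prefix or path.startswith(prefix + "/")
        if in_branch and keyword.lower() in title.lower():
            hits.append(title)
    return hits

print(len(search("heart")))    # whole Internet: every match
print(search("heart", "med"))  # only the medical branch
```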

Software-Based Components

Accessing or "surfing" the Internet or an Intranet should resemble the way we work each day. Since almost everyone owns or uses a PC (or one day will), either at home or at work, it makes sense that "the way we work each day" is in fact the way we work on our PCs. What I am saying is that users should have a standard GUI (say, Windows…what else) with which they can be comfortable and proficient. Everything else, including the Internet, should be as familiar to the user as this GUI.

Operating Systems (Windows)

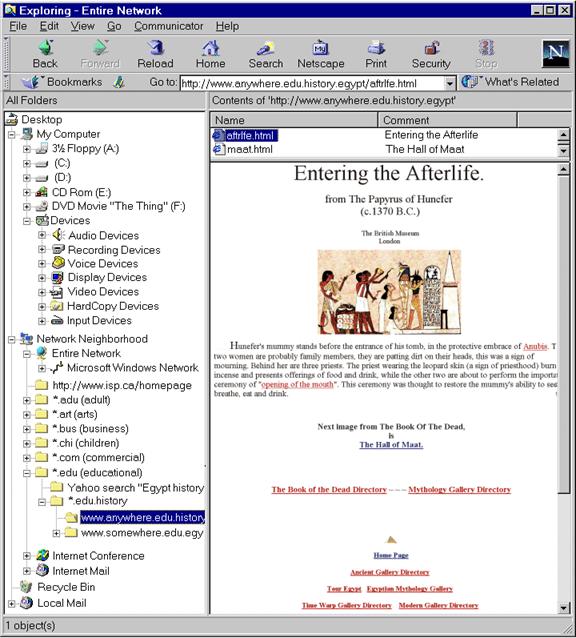

Applications that run on the Windows platform already have that standard look and feel, with "right-click" context menus and all, but these are unavailable when surfing the Internet. By creating a consistent look and feel, like the Windows GUI, when connected to the Internet, a user can more confidently and easily "surf", "download", "upload" and do just about anything else their heart desires. Take the example shown in Figure 1, which uses the Windows NT Explorer to show what surfing the Net with a GUI like that one would be like. Microsoft was probably on the right track, but going the wrong way, when it introduced its Active Desktop. Worse yet, it did not rewrite Windows to accommodate these new features. Rather, it added another layer of software on top of the OS, increasing its bulk, demanding more resources and slowing down its overall operation. Microsoft is trying to make the Windows GUI conform to the “Internet GUI” instead of the other way around. The Internet should conform, so to speak, to the Windows GUI. This can be done with minimal development and, therefore, cost.

If we look at the image I constructed (Figure 1), using this one common interface is not only easy and familiar, it is also economical and practical. This is because Internet browsers need all kinds of plug-ins (some at a cost) to achieve a truly multimedia Internet experience, even though all the functionality for video, audio, etc. is already part of the platform. Besides, there are no standards for these plug-ins. Every basement-based company on the planet with a programmer is trying to develop plug-ins for Internet browsers in the hope that theirs will be adopted as "The Standard". Let's not mention all of the "exception errors", "illegal operation errors" and others that these badly behaved programs can cause. Once again, it is another layer of software on top of the OS. The software needed to operate these devices is already part of the OS, so why not use it? Why add another driver or plug-in if the capabilities already exist? The only necessary adjustment would be to modify certain *.dll files and drivers (perhaps Winsock also). Why increase a user's learning curve unnecessarily with plug-in software?

The precept should be: "Someone who can work with Windows should be able to work with anything." The whole idea of Windows was to provide a user-friendly, intuitive, easy-to-use yet powerful graphic interface for all users regardless of their technical knowledge or background. We have somehow drifted from the original idea. Strange how the acronym K.I.S.S. (Keep It Simple Stupid) is known by all but adhered to by few.

Once again using Figure 1 as a guide, let's say a user is viewing the web page shown on the right and wishes to save it to their hard drive. They would simply "right-click", or "drag-and-drop" the page onto the C: drive, without needing to save all the images separately, and could then view the page without opening a browser! Logically speaking, there would be no other reason for dragging and dropping the page onto the C: drive than wanting to save it to disk. If they wished to print the page, they would drag and drop the page or an image onto one of their "HardCopy Devices" (e.g. Fax, Ink jet, Laser, Plotter, etc.). Again, there is no other logical reason for doing this than wanting to print the page.

Going even further, say the user is in an Internet conference and wants to show the page to the others. He would do the same thing, except that he would "drop" the image into the current conference. The user would then single-click the Conference icon to bring up the application in the right-hand window, replacing the Web page, and edit or add comments to the Web page (if desired). Let's say an *.avi was playing in the right-hand window. If the user wanted to record it to video tape, he would again "drag-and-drop" it onto a home VTR under "Recording Devices". If he wanted to view the same *.avi on his living room TV, or on a projector in the conference room at work, he would "drag-and-drop" it onto his TV or projector under "Display Devices". Now this is what I call intuitive, even instinctive.
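The common thread in these examples is that the drop target alone determines the action. One way to model that is a dispatch table keyed by the device category in the Explorer tree. This is a hypothetical sketch: the category names echo Figure 1 and the text, and the returned strings stand in for calls into real save, print, record and display drivers.

```python
# Hypothetical mapping from a tree category to the one action that
# dropping an item on it can logically mean.
DROP_ACTIONS = {
    "Drives": "save",
    "HardCopy Devices": "print",
    "Recording Devices": "record",
    "Display Devices": "display",
    "Conference": "share",
}

def handle_drop(item, target_category, target_name):
    """Decide what a drag-and-drop means purely from where it lands."""
    action = DROP_ACTIONS.get(target_category)
    if action is None:
        raise ValueError(f"nothing can be dropped on {target_category!r}")
    # A real shell would call the matching driver here; we just describe it.
    return f"{action} {item} -> {target_name}"

print(handle_drop("page.html", "Drives", "C:"))
print(handle_drop("clip.avi", "Recording Devices", "Living room VTR"))
```

Because the table is data rather than code, adding a new device class only means adding one entry, which fits the idea of adapting the existing GUI instead of layering on plug-ins.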

Because Figure 1 is an image and not an actual application, I could not include all aspects of the interface. In effect, the active selection in the tree in the "left-frame" dictates the Options and Command buttons available to the user in both the "Menu Bar" and the "Tool Bar", because it is the active application. Every other application not in current view is automatically "Minimized" to save resources. If a user wishes to use more than one application simultaneously, he can choose "Tiling Horizontal", "Tiling Vertical" or "Cascading" for the windows in the "right-frame". Alternatively, he can double-click either the minimized application or the active one to open a new, full-screen, independent window as we do now (complete with the application's Menu and Tool Bar), but with the Explorer portion (the "left-frame") hidden by default. Double-clicking the left border of the window makes it reappear.

The highlight color of the active (selected) application's text in the "left-frame" of the Explorer window should be GREEN. All other applications that are currently open but minimized will be highlighted in RED, and TSRs, system tray and background-running applications will have their program folder or name (to be decided) shown in electric BLUE text with no highlight. Other colors can be used for temporary background activity (saving a large file, recording a movie, printing, copying, etc.) or scheduled activity (Disk Defrag, Virus Scanning, etc.).
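The color rules amount to a small mapping from application state to display style. A hypothetical sketch, assuming each open application is known to be either foreground or background; the enum and function names are illustrative only, and the extra colors for temporary or scheduled activity are left out.

```python
from enum import Enum

class TreeStyle(Enum):
    ACTIVE = "green highlight"                       # the selected application
    MINIMIZED = "red highlight"                      # open but not in view
    BACKGROUND = "electric blue text, no highlight"  # TSRs, system tray

def tree_styles(open_apps, active):
    """Return the left-frame style for each open application.
    open_apps maps an application name to True if it runs in the background."""
    styles = {}
    for name, in_background in open_apps.items():
        if name == active:
            styles[name] = TreeStyle.ACTIVE
        elif in_background:
            styles[name] = TreeStyle.BACKGROUND
        else:
            styles[name] = TreeStyle.MINIMIZED
    return styles

apps = {"Web Page": False, "Conference": False, "Virus Scanner": True}
for name, style in tree_styles(apps, active="Web Page").items():
    print(f"{name}: {style.value}")
```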

With this kind of interface, only "context sensitive" menus, and "options dialogs" would be needed. Right, left and middle clicking of the mouse should be sufficient to access all of the properties, options and menus needed. I have not made mention of all of the other functionality and properties of the Windows GUI, but most of them, if not all, can be adapted to function with any application or device. All of these enhancements would simplify the OS and thus, lighten it and increase its speed of operation.

Figure 1
